As a result, we will scrape the site to collect its unstructured data and put it into an ordered form to build our own dataset. To scrape the website, we will use Scrapy, a Python framework designed to make web scrapers easier to build and less painful to maintain. This web scraping tutorial briefly shows how to get the NY lottery winning numbers, events in history, and the Scrabble word of the day.

There are a lot of hypocritical people that complain about modern life while benefiting enormously from it every day… I know this is true because I am one of those people.

In a lot of ways people had it better in the past. They could discover new continents, invent the theory of gravity, and drive without seatbelts.

The American expansion into the West was one of those golden periods of time. Cowboys riding around, miles and miles of open range, tuberculosis—what more could anyone ask for?

Let’s use Python and some web scraping techniques to download images. Update 2 (Feb 25, 2020): One of the problems with scraping webpages is that the target elements depend on a selector of some sort.

I am a fan of Western films. Those films captured the West with all the cool shootouts and uniquely western landscapes. Sure, they are romanticized but that is what I like. While watching a spaghetti western I wondered where these events were supposed to be taking place.

The question in my head became, “According to Western films, where is the West?” We can answer that. We have the technology. After a few false starts I got a working process together:

- Find a bunch of lists of Western films on Wikipedia

- Scrape the film titles

- Run those film titles by Wikipedia to see if we can find their plots

- Use a natural language processor (NLP) to pull place names from the plots

- Run the place names through Wikipedia again to remove junk place names

- Geocode the place names and load it all into a spatially enabled dataframe

- Map it

Let’s take the example of the 1952 film Cripple Creek. First, we get the title.
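As a rough illustration of the title-scraping step, here is a minimal sketch using only the Python standard library. It assumes that film titles on a list page appear as links wrapped in italic tags; that layout, the `TitleParser` and `scrape_titles` names, and the sample HTML (including its link targets) are all assumptions made for this example and are not taken from the notebook.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text of links that sit inside italic tags."""

    def __init__(self):
        super().__init__()
        self.in_italic = 0    # nesting depth of <i> tags
        self.in_link = False  # True while inside an <a> within an <i>
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "i":
            self.in_italic += 1
        elif tag == "a" and self.in_italic:
            self.in_link = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "i" and self.in_italic:
            self.in_italic -= 1
        elif tag == "a":
            self.in_link = False

    def handle_data(self, data):
        if self.in_link:
            self.titles[-1] += data


def scrape_titles(html):
    """Return the non-empty italic link texts found in an HTML string."""
    parser = TitleParser()
    parser.feed(html)
    return [t.strip() for t in parser.titles if t.strip()]


# Made-up sample markup; a real list page would be downloaded first.
SAMPLE_HTML = """
<table>
  <tr><td><i><a href="/wiki/Cripple_Creek_(film)">Cripple Creek</a></i></td><td>1952</td></tr>
  <tr><td><i><a href="/wiki/High_Noon">High Noon</a></i></td><td>1952</td></tr>
  <tr><td><a href="/wiki/A_Director">A Director</a></td></tr>
</table>
"""

print(scrape_titles(SAMPLE_HTML))
```

The non-italic link in the last row is skipped, which is the point of keying on italics: it separates titles from directors, studios, and other linked cells.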

Next, we use the title to find the plot on its Wikipedia page.
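One way to isolate the plot once a page's wikitext is in hand is to cut out the section under the "Plot" heading. The sketch below assumes the article uses a level-2 `== Plot ==` heading; the `extract_plot` helper and the sample wikitext are hypothetical, and downloading the page itself is left out.

```python
import re


def extract_plot(wikitext):
    """Return the text under the '== Plot ==' heading, or None if absent."""
    # Capture lazily from the Plot heading up to the next level-2 heading
    # (a line starting with exactly two '=') or the end of the text.
    match = re.search(
        r"^==\s*Plot\s*==\s*\n(.*?)(?=^==[^=]|\Z)",
        wikitext,
        flags=re.DOTALL | re.MULTILINE,
    )
    return match.group(1).strip() if match else None


# Placeholder wikitext, not the real article.
SAMPLE_WIKITEXT = """'''Cripple Creek''' is a 1952 Western film.

== Plot ==
Placeholder plot text that mentions Cripple Creek and Texas.

== Cast ==
* An actor
"""

print(extract_plot(SAMPLE_WIKITEXT))
```

Returning `None` for pages without a Plot section makes it easy to count how many titles actually yield a plot.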

After running the plot through the NLP, we get the following “GPE” entities.
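The "GPE" label (geopolitical entity) is what named-entity recognizers such as spaCy assign to countries, states, and cities. The sketch below assumes spaCy with its `en_core_web_sm` model; the post does not say which NLP library was used, so treat that choice, and the helper names `gpe_entities` and `places_from_plot`, as assumptions. The filter is demonstrated on a stand-in document so it can be tried without spaCy installed.

```python
from types import SimpleNamespace


def gpe_entities(doc):
    """Return unique GPE entity texts from a spaCy-style doc, in order."""
    seen = []
    for ent in doc.ents:
        if ent.label_ == "GPE" and ent.text not in seen:
            seen.append(ent.text)
    return seen


def places_from_plot(plot_text, model="en_core_web_sm"):
    """Run spaCy over a plot; needs spaCy and the named model installed."""
    import spacy  # imported lazily so gpe_entities works without spaCy

    nlp = spacy.load(model)
    return gpe_entities(nlp(plot_text))


# Stand-in for a processed document (made-up entities for illustration).
fake_doc = SimpleNamespace(ents=[
    SimpleNamespace(text="Cripple Creek", label_="GPE"),
    SimpleNamespace(text="Texas", label_="GPE"),
    SimpleNamespace(text="Someone", label_="PERSON"),
    SimpleNamespace(text="Texas", label_="GPE"),
])

print(gpe_entities(fake_doc))
```

Deduplicating per plot keeps one film from dominating the map just because it names the same place repeatedly; whether the original notebook does this is not stated.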

Next, we geocode these addresses to get X and Y coordinates and read them into a pandas dataframe.
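A minimal sketch of the geocoding step follows. It takes the geocoder as a plain function so that any service can be plugged in; the `geocode_places` helper and the `APPROX_COORDS` lookup table, with its rough coordinates, are stand-ins invented for this example and are not the service used in the notebook.

```python
def geocode_places(places, geocoder):
    """Build one row per place the geocoder can resolve.

    `geocoder` is any callable that maps a place name to a
    (latitude, longitude) pair, or None when nothing is found.
    """
    rows = []
    for place in places:
        result = geocoder(place)
        if result is None:
            continue  # skip names the geocoder cannot resolve
        lat, lon = result
        rows.append({"place": place, "x": lon, "y": lat})
    return rows


# Hypothetical lookup table standing in for a real geocoding service.
# The coordinates are rough approximations for illustration only.
APPROX_COORDS = {
    "Cripple Creek, CO": (38.75, -105.18),
    "Texas": (31.0, -100.0),
}

rows = geocode_places(["Cripple Creek, CO", "Texas", "Nowhereville"], APPROX_COORDS.get)
for row in rows:
    print(row)

# With pandas installed, pandas.DataFrame(rows) turns these rows into a dataframe.
```

Here X holds longitude and Y holds latitude, matching the usual map convention.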

We see Cripple Creek, CO and Texas mapped from the plot of the film Cripple Creek.

The notebook is broken up as follows:

Import packages

Search Plots with Natural Language Processor for Place Names

This is a map of the West according to our definition and process.

Many of the hotspots seem to come from frequent mentions of states like Texas, Colorado, Kansas, and California. But a lot of cities and towns made it through as well.

To start, 1,995 titles were collected. From these we found over 1,750 movie plots, which yielded about 3,750 potential place names. Those got filtered down to around 1,750 by checking their Wikipedia entries. Finally, 1,000 or so points made it to the end to be plotted.

Plotting the centroid, we find {36.35037924014938, -106.2693241648988}, which sits neatly in north-central New Mexico.
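The post does not say how the centroid was computed. One simple possibility, shown here as a sketch, is the arithmetic mean of the latitudes and longitudes. That is a reasonable approximation over a regional extent like the American West, though it ignores the Earth's curvature. The `centroid` helper and the sample points are made up for illustration.

```python
def centroid(points):
    """Arithmetic mean of (latitude, longitude) pairs."""
    if not points:
        raise ValueError("need at least one point")
    lats = [lat for lat, _ in points]
    lons = [lon for _, lon in points]
    return sum(lats) / len(lats), sum(lons) / len(lons)


# Made-up sample points, not the real dataset.
sample_points = [(30.0, -100.0), (40.0, -110.0), (38.0, -105.0)]

print(centroid(sample_points))
```

Because every geocoded mention counts as one point, places named in many plots pull this kind of centroid toward themselves.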

If you ever make it on Jeopardy and the answer “The centroid of the American West as defined by geocoding place names mentioned in the plots of Western films between 1920 and 1969” comes up, do not respond “Carson National Forest” or you will get it wrong. You need to answer in the form of a question on Jeopardy.

The Jupyter notebook can be found here.

About

LibriVox is a hope, an experiment, and a question: can the net harness a bunch of volunteers to help bring books in the public domain to life through podcasting?

LibriVox volunteers record chapters of books in the public domain, and then we release the audio files back onto the net. We are a totally volunteer, open source, free content, public domain project.

Policies

Copyright

Listening to the files

See also: How To Get LibriVox Audio Files

Finding Audiobooks

Recommendations

Searching

Lists & Indexes

Other resources for listeners

LibriVox volunteers narrate, proof listen, and upload chapters of books and other textual works in the public domain. These projects are then made available on the Internet for everyone to enjoy, for free.

There are many, many things you can do to help, so please feel free to jump into the Forum and ask what you can do to help!

See also: How LibriVox Works

Where to Start

Most of what you need to know about LibriVox can be found on the LibriVox Forum and the FAQ. LibriVox volunteers are helpful and friendly, and if you post a question anywhere on the forum you are likely to get an answer from someone, somewhere within an hour or so. So don't be shy! Many of our volunteers have never recorded anything before LibriVox.

Types of Projects

We have three main types of projects:

- Collaborative projects: Many volunteers contribute by reading individual chapters of a longer text.

- We recommend contributing to collaborative projects before venturing out to solo projects.

- Dramatic Readings and Plays: Contributors voice the individual characters. When complete, the editor compiles them into a single recording.

- Solo projects: One experienced volunteer contributes all chapters of the project.

Proof Listener (PL)

Not all volunteers read for LibriVox. If you would prefer not to lend your voice to LibriVox, you could lend us your ears. Proof listeners catch mistakes we may have missed during the initial recording and editing process.

Reader (Narrator)

Readers record themselves reading a section of a book, edit the recording, and upload it to the LibriVox Management Tool.

For an outline of the LibriVox audiobook production process, please see The LibriVox recording process.

One Minute Test

We require new readers to submit a sample recording so that we can make sure that your setup works and that you understand how to export files meeting our technical standards. We do not want you to waste precious hours reading whole chapters only to discover that your recording is unusable due to a preventable technical glitch.

Record

Recording Resources: Non-Technical

- LibriVox disclaimer in many languages

Recording Resources: Technical

Dramatic Readings and Plays

Book Coordinator (BC)

A book coordinator (commonly abbreviated BC in the forum) is a volunteer who manages all the other volunteers who will record chapters for a LibriVox recording.

Metadata Coordinator (MC)

Metadata coordinators (MCs) help and advise book coordinators and take over the files with the completed recordings (soloists are also book coordinators in this sense, as they prepare their own files for the metadata coordinators). The files are then prepared and uploaded to the LibriVox catalogue, in a lengthy and cumbersome process.

Graphic Artist

Volunteer graphic artists create the album cover art images shown in the catalog.

Resources and Miscellaneous

Resources

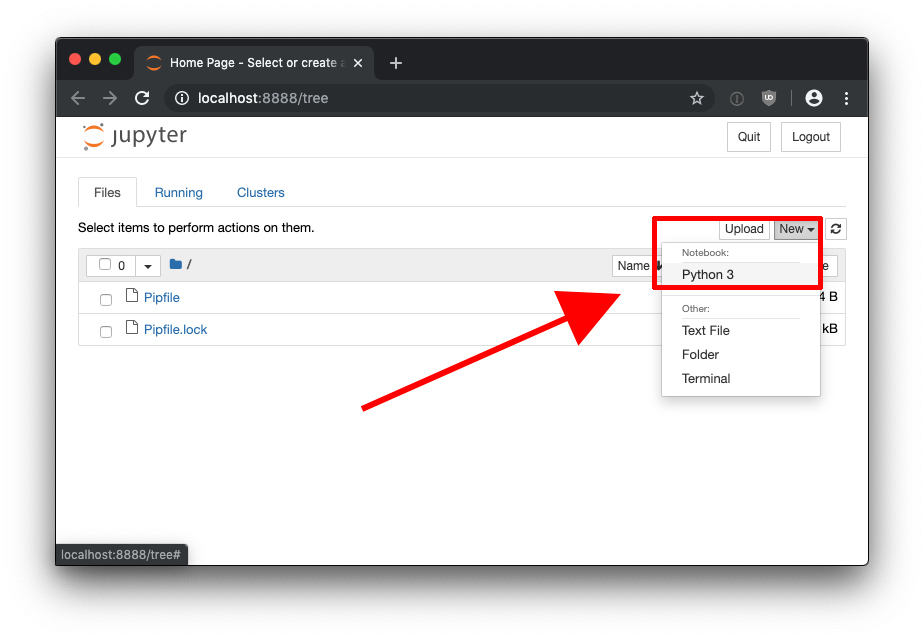

How to Edit the LibriVox Wiki

NOTE: Anyone may read this Wiki, but if you wish to edit the pages, please log in, as this Wiki has been locked to avoid spam. Apologies for the inconvenience.

- If you need to edit the Wiki, please request a user account by sending a private message (PM) to one of the admins: dlolso21, triciag, or knotyouraveragejo.

- You will be given a username (same as your forum name) and a temporary password. Please include your email address in your PM.
